Cloud computing is the set of disciplines, technologies, and business models used to render IT capabilities as on-demand services. The term “cloud” has been used historically as a metaphor for the Internet. This usage was originally derived from its common depiction in network diagrams as an outline of a cloud, used to represent the transport of data across carrier backbones (which owned the cloud) to an endpoint location on the other side of the cloud.

The Emergence of Cloud Computing:

Utility computing can be defined as the provision of computational and storage resources as a metered service, similar to those provided by a traditional public utility company. This, of course, is not a new idea. This form of computing is growing in popularity, however, as companies have begun to extend the model to a cloud computing paradigm providing virtual servers that IT departments and users can access on demand. Early enterprise adopters used utility computing mainly for non-mission-critical needs, but that is quickly changing as trust and reliability issues are resolved.

Some people think cloud computing is the next big thing in the world of IT. Others believe it is just another variation of the utility computing model that has been repackaged in this decade as something new and cool. However, the buzzword “cloud computing” is not the only source of confusion. With so few vendors actually practicing this form of technology, and with almost every analyst from every research organization defining the term differently, its meaning has become nebulous. While the individual definitions are succinct and accurate, listing the characteristics common to many cloud computing services gives the definition scope and aids comprehension. These common characteristics include:

- Shared infrastructure: As a part of doing business, cloud providers invest in and build the infrastructure necessary to offer software, platforms, or infrastructure as a service to multiple consumers. The infrastructure—and environment necessary to house it—represents a large capital expense and ongoing operational expense that the provider must recoup before making a profit. As a result, consumers should be aware that service providers have a financial incentive to leverage the infrastructure across as many consumers as possible.

- On-demand self-service: On-demand self-service is the cloud customer’s (i.e., consumer) ability to purchase and use cloud services as the need arises. In some cases, cloud vendors provide an application programming interface (API) that enables the consumer to programmatically (or automatically through a management application) consume a service.

- Elastic and scalable: From a consumer point of view, cloud computing’s ability to quickly provision and deprovision IT services creates an elastic, scalable IT resource. Consumers pay for only the IT services they use. Although no IT service is infinitely scalable, the cloud service provider’s ability to meet consumer’s IT needs creates the perception that the service is infinitely scalable and increases its value.

- Consumption-based pricing model: Providers charge the consumer per amount of service consumed. For example, cloud vendors may charge for the service by the hour or gigabytes stored per month.

- Dynamic and virtualized: The need to leverage the infrastructure across as many consumers as possible typically drives cloud vendors to create a more agile and efficient infrastructure that can move consumer workloads, lower overhead, and increase service quality. Many vendors choose server virtualization to create this dynamic infrastructure.

- Public cloud: An IT capability as a service that cloud providers offer to any consumer over the public Internet. Examples: Salesforce.com, Google App Engine, Microsoft Azure, and Amazon EC2.

- Private cloud: An IT capability as a service that cloud providers offer to a select group of consumers. The cloud service provider may be an internal IT organization (i.e., the same organization as the consumer) or a third party. The network used to offer the service may be the public Internet or a private network, but service access is restricted to authorized consumers. Example: Hospitals or universities that band together to purchase infrastructure and build cloud services for their private consumption.

- Internal cloud: A subset of a private cloud, an internal cloud is an IT capability offered as a service by an IT organization to its business. For example, IT organizations building highly virtualized environments can become infrastructure providers to internal application developers. In a typical IT organization, application developers are required to work through the IT infrastructure operations team to procure and provision the development and production application platform (e.g., hardware, OS, and middleware) necessary to house a new application. In this model, the infrastructure team provides cloud-like IT infrastructure to the application development team (or any other IT team) thereby allowing it to provision its own application platform.

- External cloud: An IT capability offered as a service to a business that is not hosted by its own IT organization. An external cloud can be public or private, but must be implemented by a third party.
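The consumption-based pricing characteristic listed above can be illustrated with a short calculation. This is a minimal sketch, not any vendor's billing formula: the `Usage` fields, the `monthly_charge` function, and the rates are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    instance_hours: float    # hours of compute consumed in the billing period
    gb_stored_month: float   # average gigabytes stored over the month


def monthly_charge(usage: Usage, rate_per_hour: float, rate_per_gb_month: float) -> float:
    """Charge only for what was consumed: metered compute plus metered storage."""
    compute = usage.instance_hours * rate_per_hour
    storage = usage.gb_stored_month * rate_per_gb_month
    return compute + storage


# Hypothetical rates: 0.10 per instance-hour and 0.15 per GB-month.
bill = monthly_charge(
    Usage(instance_hours=200, gb_stored_month=50),
    rate_per_hour=0.10,
    rate_per_gb_month=0.15,
)
print(f"Monthly charge: {bill:.2f}")
```

A consumer who deprovisions a server halfway through the month simply accrues fewer instance-hours, which is the link between this pricing model and the elasticity characteristic.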

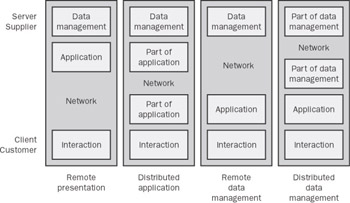

In addition to the definitions, architectural diagrams can further clarify cloud computing as well as classify vendor offerings.

Figure 1: Cloud Computing Tiered Architecture

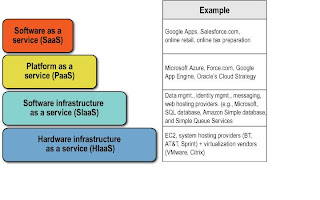

The “stair-step” layering of cloud service tiers serves two purposes. First, the stair-step effect illustrates that IT organizations are not restricted to engage the cloud through the SaaS layer. IT organizations will interface cloud computing at multiple layers, utilizing either a turnkey SaaS solution or other cloud layers to complete an internally created solution. For example, an IT organization may utilize a hardware infrastructure as a service (HIaaS) provider to run a custom built application and utilize a SaaS provider, such as Salesforce.com, for customer relationship management (CRM).

The second purpose of the layered cloud services model is to illustrate that cloud services can build on one another. For example, IBM Websphere sMash (PaaS) utilizes Amazon’s EC2 (HIaaS). This is not to say that higher-tier cloud services must be built on lower tiers. For example, Microsoft’s first version of Azure is not built on another cloud service. However, the potential for cloud layering exists, and IT organizations need to be aware when a cloud service is layered on another service.

Software as a Service (SaaS)

Software as a service (SaaS) is when a vendor designs the application and hosts it so that users can access the application through a web browser or a rich Internet application (RIA) mechanism such as Adobe Air or Microsoft Silverlight. In the early days of SaaS (i.e., the mid-1990s), vendors who delivered applications via browsers were called application service providers (ASPs). Over time, the accepted term has changed to SaaS. Because the vendor designs, hosts, and supports the application, SaaS differs from hosting, which is when the enterprise buys the software license from a vendor and then hires a third party to run the application.

The markets served by SaaS have evolved over time. In the 1990s, SaaS solutions often targeted departmental needs (e.g., human resources and web analytics) or a non-core service (e.g., web conferencing). That way, if the SaaS solution went down, the entire company did not grind to a halt. Today, SaaS solutions are available for a much wider swath of needs, including business intelligence, document sharing, e-mail, office productivity suites, sales force automation (SFA), web analytics, and web conferencing. A relatively recent change in the market is that major vendors such as Cisco Systems, Google, IBM, Microsoft, and Oracle now offer SaaS solutions. In the past, SaaS was the province of startups.

A sampling of SaaS application segments and affiliated vendors:

• Document sharing: Adobe, IBM Lotus, Google, and Microsoft

• E-mail: Cisco, Google, Microsoft, and Yahoo!

• Office productivity suites: Google, ThinkFree, and Zoho

• Sales force and customer management: NetSuite, Oracle, and Salesforce.com

• Web analytics: Coremetrics, Omniture, and WebTrends

• Web conferencing: Cisco Systems, IBM Lotus, and Microsoft

Platform as a Service (PaaS)

A level below SaaS, platform as a service (PaaS) is an externally managed application platform for building and operating applications and services. Like any application platform, a PaaS environment supplies development and runtime frameworks to support presentation, business logic, data access, and communication capabilities. The PaaS environment must also supply supporting infrastructure capabilities, such as authentication, authorization, session management, transaction integrity, reliability, availability, and scalability.

Also, a PaaS environment typically provides development tools for working with the supplied frameworks. Applications and services developed using these frameworks may be expressed as executable code, scripts, or metadata. In some cases, the development tools are hosted by the PaaS provider (particularly when applications are expressed as scripts or metadata). In other cases, the development tools are supplied as downloadable integrated development environments (IDEs) that can be integrated with the organization’s traditional software development lifecycle (SDLC) infrastructure.

We can place the PaaS vendors into five categories:

- Java application platform vendors: Java application vendors package their traditional application platform middleware for delivery on Amazon EC2. Examples: IBM, Red Hat, Oracle, and SpringSource.

- Microsoft: Microsoft is collecting a set of software infrastructure services, such as .NET Services and Structured Query Language (SQL) Services, running in an elastic operating environment called Windows Azure. The combined platform is called Windows Azure Platform Services.

- Emerging proprietary contenders: Emerging platform contenders provide rapid application development capabilities through creation and delivery of new proprietary development and runtime environments. Examples: Salesforce.com and Google App Engine

- Niche vendors: Niche framework vendors provide specialized application platforms as services. Examples: GigaSpaces Technologies (extreme transactions), Appian Anywhere (business process management [BPM]), and Ning (social networking)

- Startup vendors: Startup PaaS vendors are platform vendors who only offer the platform online. Examples: LongJump and Bungee Labs

Software infrastructure as a service (SIaaS) is a stand-alone cloud service that provides a specific application support capability, but not the entire application software platform service (otherwise, it would be PaaS). For example, Microsoft SQL Data Services is a SIaaS offering. Although SQL Data Services is included in Azure, it is also available as a stand-alone infrastructure service.

The intended consumers of SIaaS offerings are software developers who want to create an application that does not have dependencies on internal infrastructure components, which can be too expensive to license, slow to deploy, and complex to maintain and support. Thus, SIaaS is not a user-consumable cloud service and, by definition, must be subsumed by applications or higher-tier cloud services (e.g., SaaS or PaaS). Other examples of SIaaS include:

- Data management services: Amazon SimpleDB and Microsoft SQL Data Services

- Messaging services: Amazon Simple Queue Service

- Integration services: Cast Iron Systems and Workday

- Content distribution: Akamai and Amazon CloudFront

Hardware infrastructure as a service (HIaaS) is a virtual or physical hardware resource offered as a service. HIaaS can be implemented in many ways, but most implementations utilize server virtualization as an underlying technology to increase infrastructure utilization (important to HIaaS providers), workload mobility, and provisioning. Many HIaaS providers also use grid and clustering technology to increase scalability and availability.

HIaaS vendors are divided into two categories: the “enablers” and the “providers.” HIaaS enablers are software vendors who develop virtualization software that is used to create hardware infrastructure services. Enabler examples include VMware (vCloud), Citrix Systems (Citrix Cloud Center [C3]), and 3Tera (AppLogic).

HIaaS providers are cloud vendors who utilize an enabling vendor’s technology to create a service. Provider examples include Amazon (EC2 and S3) and Rackspace. AT&T, T-Mobile, and CDW are examples of hosting providers that are planning to provide HIaaS using VMware’s vCloud product.

Cloud Usage Models:

The cloud tiered architecture model provides a basis to discuss cloud computing usage models and to define additional terms:

- Service provider: The organization providing the cloud service. Also known as “cloud service provider” or “cloud provider.” Service providers may offer a cloud service to a select group (private cloud) or to the general public (public cloud).

- Service consumer: The person or organization that is using the cloud service. The consumer may access a public or private cloud using the public Internet or a private network.

- Service procurer: The person or organization obtaining the service on behalf of the consumer.

Although the cloud presents tremendous opportunity and value for organizations, the usual IT requirements (security, integration, and so forth) still apply. In addition, some new issues come about because of the multi-tenant nature (information from multiple companies may reside on the same physical hardware) of cloud computing, the merger of applications and data, and the fact that a company’s workloads might reside outside of their physical on-premise datacenter. This section examines five main challenges that cloud computing must address in order to deliver on its promise.

Security

Many organizations are uncomfortable with the idea of storing their data and applications on systems they do not control. Migrating workloads to a shared infrastructure increases the potential for unauthorized access and exposure. Consistency around authentication, identity management, compliance, and access technologies will become increasingly important. To reassure their customers, cloud providers must offer a high degree of transparency into their operations.

Data and Application Interoperability

It is important that both data and application systems expose standard interfaces. Organizations will want the flexibility to create new solutions enabled by data and applications that interoperate with each other regardless of where they reside (public clouds, private clouds that reside within an organization’s firewall, traditional IT environments, or some combination). Cloud providers need to support interoperability standards so that organizations can combine any cloud provider’s capabilities into their solutions.

Data and Application Portability

Without standards, the ability to bring systems back in-house or choose another cloud provider will be limited by proprietary interfaces. Once an organization builds or ports a system to use a cloud provider’s offerings, bringing that system back in-house will be difficult and expensive.

Governance and Management

As IT departments introduce cloud solutions in the context of their traditional datacenter, new challenges arise. Standardized mechanisms for dealing with lifecycle management, licensing, and chargeback for shared cloud infrastructure are just some of the management and governance issues cloud providers must work together to resolve.

Metering and Monitoring

Business leaders will want to use multiple cloud providers in their IT solutions and will need to monitor system performance across these solutions. Providers must supply consistent formats to monitor cloud applications and service performance and make them compatible with existing monitoring systems.

It is clear that the opportunity for those who effectively utilize cloud computing in their organizations is great. However, these opportunities are not without risks and barriers. It is our belief that the value of cloud computing can be fully realized only when cloud providers ensure that the cloud is open.

Resources in the cloud:

Virtualization. The notion of cloud computing as an application of "virtualization" is true but also misleading. Most people associate virtualization with the notion of server virtualization and virtual machines, and while these are components of cloud computing, the cloud computing concept requires a much broader virtualization view. A cloud computing complex appears as a single abstract resource that can support any application or application component, and when the application is needed (because someone runs it on the virtual computer representing the cloud) it is assigned specific resources from the pool available. In cloud computing, the most significant question is the flexibility of this "resource brokerage" process, because constraints on how resources are assigned have a major effect on overall resource utilization and thus the benefit that cloud computing can offer.
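The effect of brokerage constraints on utilization can be seen in a toy example. The sketch below assumes a simple first-fit policy over a pool of hosts. The `broker` function, the host names, and the CPU counts are hypothetical and are not taken from any particular cloud product.

```python
def broker(requests, hosts, allowed=None):
    """First-fit assignment of CPU requests to hosts drawn from a shared pool.

    requests: list of (app_name, cpus_needed)
    hosts:    dict of host_name -> free CPUs
    allowed:  optional dict of app_name -> set of host names the app may use
    Returns a dict of app_name -> host_name, or None when no permitted host fits.
    """
    free = dict(hosts)  # copy so the caller's pool is not mutated
    placement = {}
    for app, need in requests:
        # Without a constraint, every host in the pool is a candidate.
        candidates = allowed.get(app, free.keys()) if allowed else free.keys()
        chosen = next((h for h in free if h in candidates and free[h] >= need), None)
        if chosen is not None:
            free[chosen] -= need
        placement[app] = chosen
    return placement


hosts = {"host-a": 4, "host-b": 4}
requests = [("web", 3), ("batch", 3), ("report", 1)]

# Flexible brokerage: any application may land on any host.
unconstrained = broker(requests, hosts)

# Constrained brokerage: "batch" is pinned to host-a, which "web" has mostly filled.
constrained = broker(requests, hosts, allowed={"batch": {"host-a"}})

print(unconstrained)
print(constrained)
```

In the constrained run, `batch` cannot be placed even though `host-b` is entirely idle, so the pool is underused. That is the sense in which assignment constraints reduce overall resource utilization.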

Storage resources. The most significant question in efficiency and resource utilization is likely to be the location of the database supporting the application. If storage and servers are to be allocated separately for optimum utilization, rather than as a unit, storage network performance is critical. This means that it is difficult to allocate storage resources across a wide area network (WAN) connection, and some cloud computing software provides a distributed cloud-optimized database management system (DBMS) to facilitate data virtualization and improve performance overall. Most cloud computing resource brokerage techniques would locate the application server and application storage in the same data center, where storage area network (SAN) connections can be used. This technique is also the norm for private clouds, where enterprises tend to have several cloud data centers with server farms and large SANs. This is why data center networking and SANs are critical parts of nearly all cloud computing architecture -- both on the vendor and the user side. In private cloud applications, these issues are controllable.

Databases. Some public cloud applications may involve "data crunching" of databases. Depending on the size of the database and the percentage of items being accessed by the cloud application, it may be smart to load the needed data onto the cloud in one step and then access it there. The process of uploading data to the cloud is a batch function, but the process of accessing data is interactive, and storage delays accumulate with the number of database accesses performed.
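The trade-off between a one-time batch upload and repeated interactive access can be estimated with simple arithmetic. The following is a rough sketch under stated assumptions: the function names and every figure (round-trip time, upload rate, access counts) are hypothetical, and real workloads would add caching, parallelism, and transfer overhead.

```python
def remote_access_time(n_accesses, wan_round_trip_s):
    """Total delay when every database access crosses the WAN."""
    return n_accesses * wan_round_trip_s


def upload_then_local_time(data_gb, upload_gb_per_s, n_accesses, local_access_s):
    """One batch upload, then every access served inside the cloud data center."""
    return data_gb / upload_gb_per_s + n_accesses * local_access_s


def break_even_accesses(data_gb, upload_gb_per_s, wan_round_trip_s, local_access_s):
    """Number of accesses above which uploading first becomes the faster option."""
    upload_time = data_gb / upload_gb_per_s
    return upload_time / (wan_round_trip_s - local_access_s)


# Hypothetical workload: 100 GB database, one million accesses.
remote = remote_access_time(1_000_000, wan_round_trip_s=0.05)
staged = upload_then_local_time(100, upload_gb_per_s=0.01,
                                n_accesses=1_000_000, local_access_s=0.001)
threshold = break_even_accesses(100, 0.01, 0.05, 0.001)

print(f"remote: {remote:.0f} s, staged: {staged:.0f} s, break-even: {threshold:.0f} accesses")
```

Because the per-access delay is multiplied by the access count while the upload cost is paid once, the staged approach wins whenever the application touches the data often enough.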

Justifying cloud computing: Outsourcing resources:

Meeting variable IT demand. The near-term impetus for cloud computing services comes from the variation of IT demand according to business cycles and in response to unexpected events. In-house computing resources are normally maintained at a level sufficient to ensure that the IT needs of line departments can be met. The level of resources needed to meet those needs depends on a combination of the total resource requirements, the extent these needs vary in an uncontrolled way because of short-term project demands, and the speed with which new resources can be added. Often the variability of demand forces enterprises to create an oversupply of IT resources to carry through peak load periods. These periods can be periodic (quarterly earnings cycles) or episodic, and in many cases, some of the applications could be outsourced to cloud computing services.

Reducing in-house capacity. It's fairly easy to see whether cloud computing services can reduce the cost of sustaining capacity reserves against peak requirements; an audit of the level of utilization of critical resources over time will normally show the range of variability. That lets organizations estimate the amount of cloud services needed to provide reserve capacity and the cost of those resources. This can be compared with the cost of sustaining excess resource capacity in-house. Generally, the more variable the demand on IT, the more savings can be generated by offloading peak demand into the cloud. But it's also true that large enterprises that achieve good resource economies internally are likely to save less than smaller ones.
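The comparison described above can be sketched as a small calculation over audited utilization data. This is a minimal illustration, assuming demand is measured in abstract capacity units per period. The function names, the demand series, and both unit costs are hypothetical.

```python
def in_house_peak_cost(demand, cost_per_unit_owned):
    """Own enough capacity to cover the peak in every period."""
    return max(demand) * cost_per_unit_owned * len(demand)


def baseline_plus_cloud_cost(demand, baseline, cost_per_unit_owned, cost_per_unit_cloud):
    """Own only a baseline and rent cloud capacity for demand above it."""
    owned = baseline * cost_per_unit_owned * len(demand)
    burst = sum(max(0, d - baseline) for d in demand) * cost_per_unit_cloud
    return owned + burst


# Hypothetical audit: six periods of demand with one sharp peak.
variable_demand = [40, 45, 50, 120, 42, 48]
flat_demand = [50, 50, 50, 50, 50, 50]

# Hypothetical costs: 10 per owned unit per period, 25 per cloud unit per period.
peak_cost = in_house_peak_cost(variable_demand, 10)
hybrid_cost = baseline_plus_cloud_cost(variable_demand, 50, 10, 25)
flat_peak_cost = in_house_peak_cost(flat_demand, 10)
flat_hybrid_cost = baseline_plus_cloud_cost(flat_demand, 50, 10, 25)

print(peak_cost, hybrid_cost)            # variable demand
print(flat_peak_cost, flat_hybrid_cost)  # flat demand
```

With these figures the variable series shows a saving from offloading the peak even though cloud capacity costs more per unit, while the flat series shows none, matching the observation that savings grow with demand variability.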

Creating operational efficiencies. Operational efficiencies for cloud computing are based on the presumption of support economy of scale, meaning that a support team managing a large cloud data center or a series of data centers is more efficient than one that manages a smaller set of resources. The corollary to this is that larger enterprises are likely to achieve good enough economies of scale with private resources, particularly private cloud computing, to reduce this benefit. That means cloud computing services are most likely to be economical for smaller organizations.